Code Review Guidelines — Beyond the “what”

What can Software Engineering research tell us about code reviews?

Code reviews and code inspections are highly efficient in fixing defects. A code review can identify from 20% to 35% of the defects, while a code inspection can identify from 45% to 70% of the defects (Caper Jones, Software defect-removal efficiency, IEEE Computer, April 1996, pp. 94–95, DOI 10.1109/2.488361, ISSN 1558–0814).

It is also much cheaper than finding defects with tests. One of the main reasons is that when you find a defect, you are already looking at the fault, the lines that need to be fixed. When you find a defect using tests, you just found the failure. Localizing the fault in the code usually takes a lot longer than actually fixing it.

Many exciting results are relevant to be shared. Many are related to the amount of time it takes to review a request and the odds of it being accepted. However, often these studies are based on open-source development, where a request may never be accepted. This blog is focused on the corporate environment, and if you are making a change, it will have to meet the standards to be approved.

In this context, I’ve selected a few of the most interesting findings.

Recommendations based on the scientific literature

-

Test-driven reviews: only ONE of the reviewers (max) should start the code review by reviewing the tests.

A study about test-driven code reviews revealed that reviewing the tests first increases the number of defects found on tests, doesn’t affect the number of defects identified in the code but decreases the number of findings related to code maintainability.

D. Spadini, F. Palomba, T. Baum, S. Hanenberg, M. Bruntink and A. Bacchelli, “Test-Driven Code Review: An Empirical Study,” 2019 IEEE/ACM 41st International Conference on Software Engineering (ICSE), 2019, pp. 1061–1072, DOI: 10.1109/ICSE.2019.00110. -

Rebasing: Rebasing after the code review affects the results negatively and should be avoided.

A 2019 paper published in the 19th International Working Conference on Source Code Analysis and Manipulation reports that rebasing the code invalidates the code changes over 34% of the time, tampering with the code reviews. Rebasing should be avoided or done before the code review.

M. Paixao and P. H. Maia, “Rebasing in Code Review Considered Harmful: A Large-Scale Empirical Investigation,” 2019 19th International Working Conference on Source Code Analysis and Manipulation (SCAM), 2019, pp. 45–55, DOI: 10.1109/SCAM.2019.00014. -

Reviewers assignment: Code reviews should be performed by at least two engineers who have not participated in the code development.

A study about code review practices confirms the expectations that code with less review coverage, fewer reviewers, and less experienced reviewers contribute negatively to post-release defects.

McIntosh, Shane & Kamei, Yasutaka & Adams, Bram & Hassan, Ahmed E.. (2015). An empirical study of the impact of modern code review practices on software quality. Empirical Software Engineering. 21. 10.1007/s10664–015–9381–9. -

Questions: As a reviewer, your questions are welcome. Avoid criticizing in the form of a question. Also, avoid rhetorical questions — if you have a point to make, be direct. What-if questions about possible scenarios are usually the most useful ones.

Another study examines the types of questions made by reviewers and how beneficial they are.

F. Ebert, F. Castor, N. Novielli and A. Serebrenik, “Communicative Intention in Code Review Questions,” 2018 IEEE International Conference on Software Maintenance and Evolution (ICSME), 2018, pp. 519–523, DOI: 10.1109/ICSME.2018.00061. -

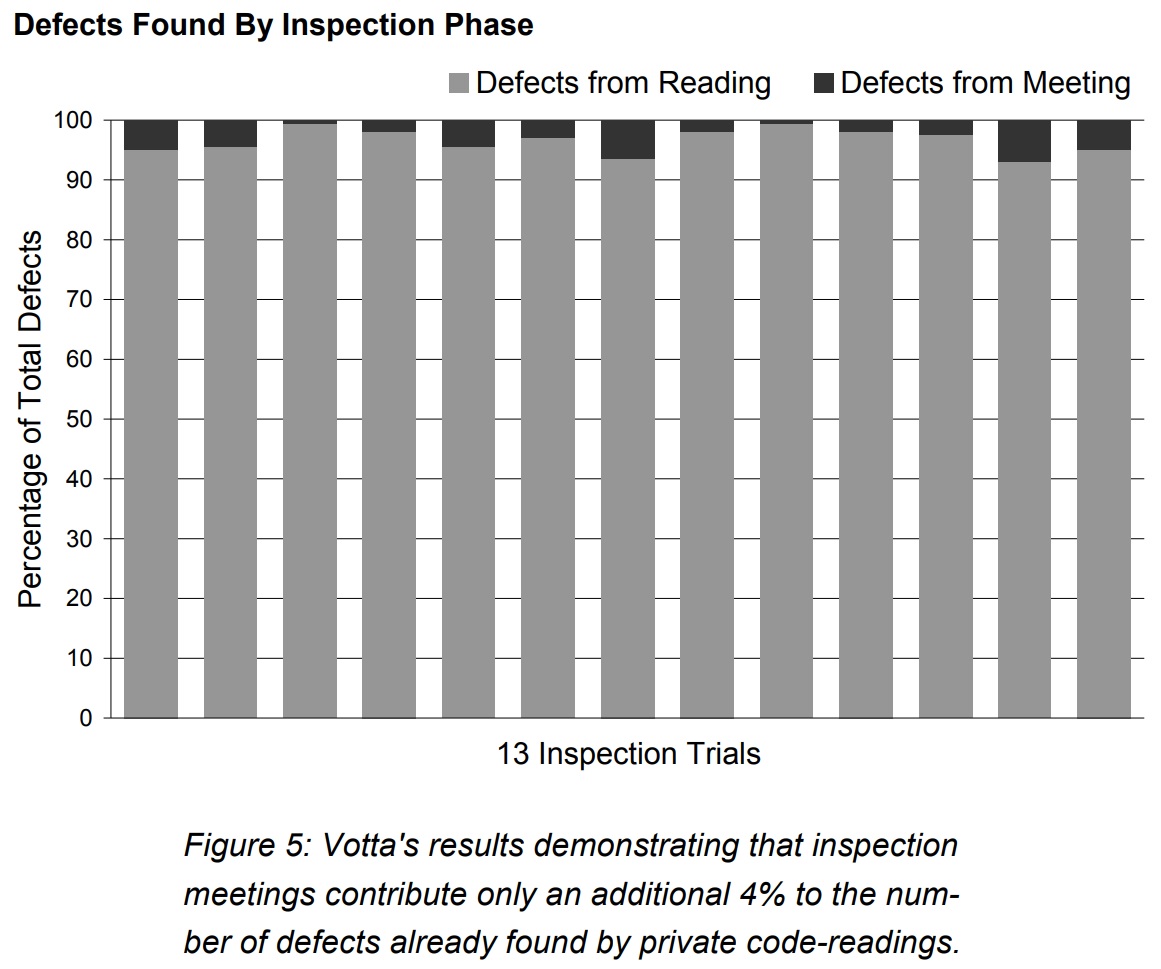

Review meetings: Code reviews are often done with reviewers reading the code individually or in meetings with or without the author. Meetings tend to identify false positives rather than find new defects. If both were conducted, meetings would identify only 4% more defects on average, according to the experiments. The conclusion from the study is that code review meetings don’t pay off.

The image below is from this paper that studied the two approaches:

Lawrence G. Votta, Jr., “Does every inspection need a meeting?”, Proceedings of the 1st ACM SIGSOFT symposium on Foundations of software engineering, p.107–114, December 08–10, 1993. DOI: 10.1145/167049.167070

-

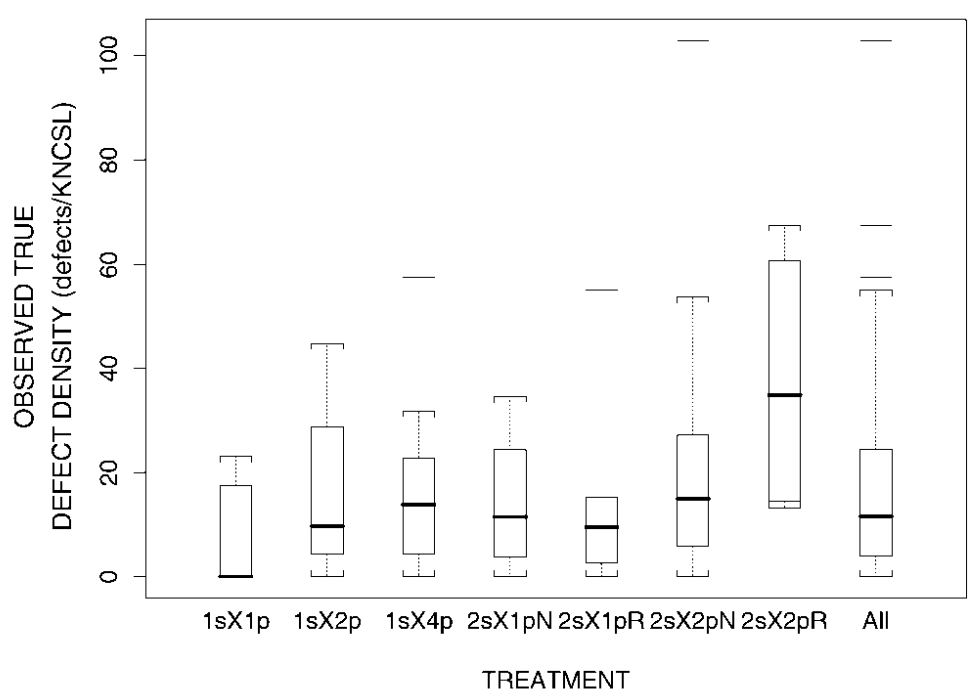

Number of reviewers: At least two independent studies conclude that 2 is the optimal number of reviewers.

Wee Land, L.P. et al. found that groups (mean 7.76) significantly outperform the average individual (mean 5.51) by an average of 29%.

Wee Land, L.P., Sauer, C., Jeffery, R. (1997). Validating the defect detection performance advantage of group designs for software reviews: Report of a laboratory experiment using program code. In: Jazayeri, M., Schauer, H. (eds) Software Engineering — ESEC/FSE’97. ESEC SIGSOFT FSE 1997 1997. Lecture Notes in Computer Science, vol 1301. Springer, Berlin, Heidelberg. DOI: 10.1007/3-540-63531-9_21A. A. Porter et al. realized a series of tests with 1 and 2 review sessions and 1, 2, and 4 people participating in each session (1sX1p,1sX2p, 1sX4p, 2sX1p, 2sX2p, and 2sX4p). There was no difference between two- and four-person inspections, but both performed better than one-person inspections. 2-session reviews were more effective than one-session only for one-person teams. However, there were no differences in effectiveness between one and two sessions when the number of reviewers was the same. The findings support that one session with 2 reviewers is the optimal scenario.

A. A. Porter, H. P. Siy, C. A. Toman and L. G. Votta, “An experiment to assess the cost-benefits of code inspections in large scale software development,” in IEEE Transactions on Software Engineering, vol. 23, no. 6, pp. 329–346, June 1997, DOI: 10.1109/32.601071.

A. A. Porter, H. P. Siy, C. A. Toman and L. G. Votta, “An experiment to assess the cost-benefits of code inspections in large scale software development,” in IEEE Transactions on Software Engineering, vol. 23, no. 6, pp. 329–346, June 1997, DOI: 10.1109/32.601071.

Gray literature

Gray literature comprises publications that did not go through peer review and are published in non-scientific texts, like whitepapers, blog posts (such as this), books (anyone can write and publish a book), Wikipedia, etc.

- Security: OWASP Code Review Guide

- Size of changes: The code should not exceed 400 lines. If it is longer than that, break the request into smaller parts.

-

Duration of review: The review should not take about 12 minutes for every 100 lines of code and no more than 1 hour in total. If it takes longer than that, take breaks, or you will find fewer issues.

A study on Cisco’s computer-based audio and video teleconferencing solution(MeetingPlace) was conducted in 2006 over a period of 10 months. 50 developers on three continents reviewed every code change before it was checked into version control. Data collected from 2500 code reviews indicate that a code review should not take more than 1h and should not have more than 400 lines of code. The pace of the review should be about 12 minutes for every 100 lines of code maximum. Anything more than that results in less effective code reviews.

My recommendations

-

Split the refactoring: If you refactored the code, it is strongly suggested that you create a separate review for the refactoring changes. The changes in a code review should have an identifiable intention, a goal. If you made just a few refactoring changes because you needed them to make the implementation work, it’s ok to leave it under the same code review.

However, if you decided to refactor other parts of the code that did not have to be changed necessarily, I’d recommend creating a separate code review for that refactoring. - WIP feedback: If the author feels that the feedback can help them decide how to implement the changes, I strongly encourage them to request an early review. An early review is not seeking approval to be merged, just feedback and suggestions.

- Responsiveness: Reviews should be done on the same day they were requested or the next day maximum, allowing the author to finalize his work, reduce context-switching, and deliver on time.

- LTGM/Approval: Make it clear if you mean that you are confident that the necessary changes will be done correctly or if you mean that the changes suggested are optional.

- Prioritize a Subject Matter Expert for that area of the code or component under change (as long as they are not the author), especially if the SME is a member of the same team as the author of the changes.

If the change is of high-risk, high-impact, or change design elements, I strongly advise having an SME as a reviewer, even when the SME is not a member of the same team.

What are your recommendations? Sound off in the comments! :-)

If you like this post, please share it (you can use the buttons in the end of this post). It will help me a lot and keep me motivated to write more. Also, subscribe to get notified of new posts when they come out.