Is the testing pyramid reasonable?

The origins and misinterpretations of the testing pyramid.

What is the testing pyramid?

In 2010, Mike Cohn published a book called “Succeeding with Agile: Software Development Using Scrum.”

In the book, he presents a pyramid that defines a strategy around WHAT to test, but many people believe it is about HOW to test.

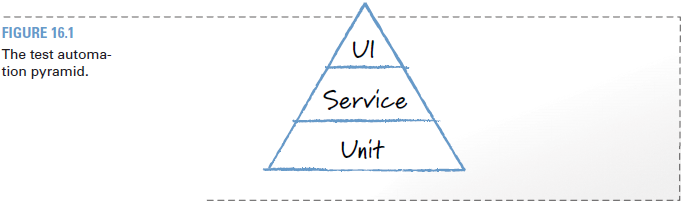

This is the pyramid (page 312):

First, notice that the pyramid does not contain the word “test.” The bottom layer is “Unit,” not “Unit test.” The pyramid talks about what you should be testing, not how. It does not say that you need to focus on unit tests; it says you need to focus more on testing units.

In other words, the pyramid is UI/Service/Unit, not User-level tests/Integration tests/Unit tests. By “Unit,” the author is not referring to unit tests but to the units themselves: the finest granularity of your application. If the bottom layer meant “unit TESTS” while the middle layer says “Service,” the pyramid would mix architectural layers with test types.

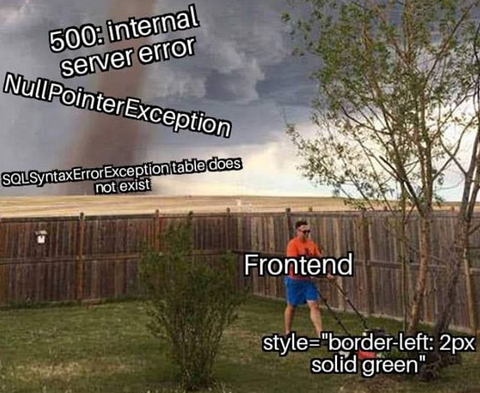

However, a quick Google image search shows how many people got it wrong (Martin Fowler has the correct image):

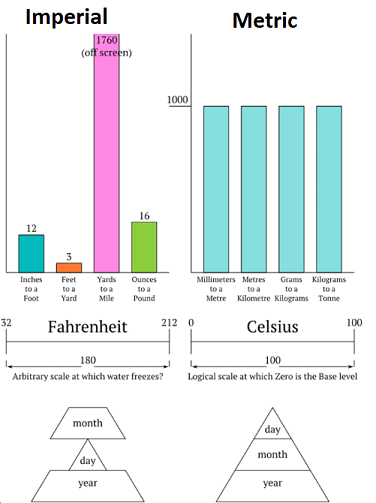

I understand the appeal of a pyramid and why so many people are comfortable with a version that describes how to approach testing. It makes intuitive sense (mostly because it is visually appealing and gives us a sense of “order”). Who doesn’t remember the joke about the imperial and metric systems that went viral a few years ago?

One looks far tidier than the other, yet both measurement systems work, and measurements can be converted between them.

In real life, we have different types of tests because each type has different effectiveness and catches different types of defects:

| Technique | Effectiveness (defects removed) |
|---|---|
| Unit test | 15% to 50% |
| Integration test | 25% to 40% |
| System test | 25% to 55% |

Adapted from Capers Jones, “Software Defect-Removal Efficiency,” IEEE Computer, April 1996, pp. 94-95, DOI 10.1109/2.488361, ISSN 1558-0814.

They also have different costs to write, execute, and maintain.

So, what did the author actually write, and what can we learn from it to define a more effective QA strategy?

Analyzing what the book says

Testing units

This is a direct quote from the book:

“Unit testing should be the foundation of a solid test automation strategy and as such represents the largest part of the pyramid. Automated unit tests are wonderful because they give specific data to a programmer — there is a bug and it’s on line 47.” — Mike Cohn

That is easy to understand because a unit test isolates one unit from all the others. A unit can be a method, a function, an entire class, a component, or a service; the developer (or team, or architect) defines what a unit is. Once it is defined, the boundaries become clear, and whatever lies outside them is part of another unit. To build a unit test, you must mock every call that crosses those boundaries, eliminating the dependencies. This is what mock objects do.
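To make the boundary idea concrete, here is a minimal sketch in Python, assuming pytest-style test functions and the standard library's `unittest.mock`. `OrderService`, `price_of`, and `tax_for` are hypothetical names invented for this illustration. The unit is `OrderService.total`; both of its collaborators sit outside the boundary, so the unit test replaces them with mocks:

```python
from unittest.mock import Mock


class OrderService:
    """Hypothetical unit: totals an order and adds tax."""

    def __init__(self, price_repository, tax_calculator):
        self.price_repository = price_repository
        self.tax_calculator = tax_calculator

    def total(self, items):
        # items is a list of (sku, quantity) pairs
        subtotal = sum(self.price_repository.price_of(sku) * qty for sku, qty in items)
        return subtotal + self.tax_calculator.tax_for(subtotal)


def test_total_adds_tax_to_subtotal():
    # Both dependencies cross the unit boundary, so both are mocked.
    prices = Mock()
    prices.price_of.side_effect = {"A": 10, "B": 5}.get
    taxes = Mock()
    taxes.tax_for.return_value = 3

    service = OrderService(prices, taxes)

    assert service.total([("A", 2), ("B", 1)]) == 28
    # The unit passed the right subtotal across the boundary.
    taxes.tax_for.assert_called_once_with(25)
```

If this test fails, the defect can only be inside `total`, which is the precise feedback Cohn describes.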

If you decide not to mock one of the interfaces to another unit, your unit is integrated with that dependency, which makes it an integration test. Yes, you can use your unit test framework to write integration tests, testing one unit directly and its dependencies indirectly. It doesn’t have to be all or nothing: you can write one test with some dependencies mocked and another test with other dependencies mocked.
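Here is a hypothetical sketch of the same kind of test, written with the same tools but with only one dependency mocked (Python, pytest-style; it is self-contained, so it defines its own `OrderService`). `PercentTaxCalculator` is an invented stand-in for a real collaborator:

```python
from unittest.mock import Mock


class PercentTaxCalculator:
    """Hypothetical real dependency: whole-number tax percentage, integer math."""

    def __init__(self, percent):
        self.percent = percent

    def tax_for(self, amount):
        return amount * self.percent // 100


class OrderService:
    """Hypothetical unit: totals an order and adds tax."""

    def __init__(self, price_repository, tax_calculator):
        self.price_repository = price_repository
        self.tax_calculator = tax_calculator

    def total(self, items):
        # items is a list of (sku, quantity) pairs
        subtotal = sum(self.price_repository.price_of(sku) * qty for sku, qty in items)
        return subtotal + self.tax_calculator.tax_for(subtotal)


def test_total_with_real_tax_calculator():
    # The price lookup stays mocked (imagine it hits a database) ...
    prices = Mock()
    prices.price_of.side_effect = {"A": 10, "B": 5}.get
    # ... but the tax calculator is the real class, so OrderService is now
    # integrated with it: same framework, but an integration test.
    service = OrderService(prices, PercentTaxCalculator(percent=20))

    assert service.total([("A", 2), ("B", 1)]) == 30
```

Mocking the tax calculator instead and using a real price repository would give another integration test across a different boundary.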

The author has a point: knowing the exact line where the defect lies helps a lot. However, there is no reason an integration test can’t give you that too. The problem is the interpretation that service-level tests must be integration tests and that a unit can only be tested with unit tests.

Mike Cohn is telling us to “test units more” so we have more detailed information about defects; he is not saying “write more unit tests.”

Mike Cohn also talks about unit tests:

“Also, because unit tests are usually written in the same language as the system, programmers are often most comfortable writing them.” — Mike Cohn

And even though developers are usually comfortable with more than one language, what he said applies equally to integration tests.

Integration tests have a higher minimum effectiveness than unit tests (25% versus 15% in the table above) because they also exercise the contracts (the interfaces between units). Therefore, units should be tested with both approaches. I’d go even further and say that you may be able to reduce the effort of writing tests if you have to mock fewer dependencies (by writing more integration tests and fewer unit tests in some circumstances).

That is not to say that you should replace your unit tests with integration tests. I’m saying that the number of tests of each type is not defined by the pyramid. You can have more unit tests or integration tests to test your units. And you will likely want a combination of both since they can capture different types of defects.

Testing the services

Here is a small disclaimer directly from the book:

“Although I refer to the middle layer of the test automation pyramid as the service layer, I am not restricting us to using only a service-oriented architecture. All applications are made up of various services. In the way I’m using it, a service is something the application does in response to some input or set of inputs.” — Mike Cohn

So, in this context, we will refer to the middle layer as a “services layer.”

What he says about it is:

“Service-level testing is about testing the services of an application separately from its user interface.” — Mike Cohn

He is clearly talking about testing a specific layer, not about applying a specific technique such as integration testing. That means the unit responsible for doing something in response to inputs needs to be tested. If all of this unit’s dependencies are mocked, we are unit testing a service. Whether that makes sense depends on the context of the application, how critical the service is, and the characteristics of its dependencies.

Usually, these classes contain little or no business logic and defer the processing of the request to other units. This may be one of the reasons why integration tests usually provide greater value at this layer. If you have fewer unit tests at this level, you will likely have fewer tests in total here, and by doing that, you will still be following the pyramid he recommends.
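A minimal sketch of such a thin service, assuming Python and pytest-style tests; `RegistrationService`, `EmailValidator`, and `InMemoryUserRepository` are hypothetical, and the in-memory repository stands in for a database. Mocking both collaborators would only confirm that `register` forwards its calls. The service-level test below feeds inputs straight to the service, with no UI involved, and uses the real collaborators, so the email rule is actually exercised:

```python
class EmailValidator:
    """Hypothetical unit holding the business rule."""

    def is_valid(self, email):
        local, sep, domain = email.partition("@")
        return bool(local) and sep == "@" and "." in domain


class InMemoryUserRepository:
    """Hypothetical stand-in for a database-backed repository."""

    def __init__(self):
        self.emails = set()

    def add(self, email):
        self.emails.add(email)


class RegistrationService:
    """Hypothetical service: no logic of its own, it only delegates."""

    def __init__(self, validator, repository):
        self.validator = validator
        self.repository = repository

    def register(self, email):
        if not self.validator.is_valid(email):
            return "invalid"
        self.repository.add(email)
        return "created"


def test_register_service_level():
    # Inputs go straight to the service (no UI), with real collaborators.
    repository = InMemoryUserRepository()
    service = RegistrationService(EmailValidator(), repository)

    assert service.register("ana@example.com") == "created"
    assert "ana@example.com" in repository.emails
    assert service.register("not-an-email") == "invalid"
```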

Testing the UI

“Automated user interface testing is placed at the top of the test automation pyramid because we want to do as little of it as possible.” — Mike Cohn

That’s true. The problem is that these tests run slower, and different browsers and mobile devices may render these screens with minor, irrelevant differences.

UI tests are essential to ensure that UI elements are displayed correctly, that UI-level validation is correct, and that use cases spanning multiple screens (typical end-to-end tests) work correctly. However, testing complex logic should be delegated to layers that don’t require rendering. Finding an error in the processing/business logic from the UI incurs a long fault localization time (the time it takes to find where the defect is). So UI tests should focus on things that require the UI to be tested.

Bottom Line

There’s no silver bullet, no one-size-fits-all approach to software testing.

You really want to focus on what is critical and more complex, and invest in testing it more thoroughly to have greater confidence that no defects are introduced there. Prioritize quality first, then optimize for your teams’ performance. If you think you can achieve the same level of quality with less effort using a different approach, go for it.

Honestly, in ordinary information systems (data-centric software with a standard relational database, forms, reports, etc.), I find it much faster and more effective to focus on integration tests (at the unit and service levels) and to cover the critical and complex units and use-case scenarios with unit tests at all levels. But this is personal experience based on my own observations, so take it with a grain of salt. And, of course, different systems have different characteristics and requirements, and different teams have different experience and maturity with testing. I would consider all these variables where possible.

In these cases, when I don’t have enough information about the quality of what is coming out and the nature of the defects, my rule of thumb is the following:

- Integration tests for any method that involves business logic. This excludes “empty” getters and setters, code generated automatically by the IDE, and code that simply defers to another method (for example, API endpoints that just translate the call into a call to a service class).

- Mandatory unit tests (in addition to integration tests) in the following cases (all other situations are at the developers’ discretion):

  - The method is part of a critical business flow/use case.

  - The method is part of regulatory compliance requirements.

  - The method has a cyclomatic complexity of 3 to 5 (above 5, the code must be redesigned).
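The rule of thumb above can be sketched as code. This is a hypothetical Python helper, not a real tool: `cyclomatic_complexity` is a rough approximation (1 plus the decision points found with the standard `ast` module), and dedicated tools such as radon count some constructs differently. `required_tests` simply encodes the thresholds listed above:

```python
import ast


def cyclomatic_complexity(source):
    """Rough McCabe complexity of the first function in `source`:
    1 + the number of decision points in its syntax tree."""
    tree = ast.parse(source)
    func = next(node for node in ast.walk(tree)
                if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)))
    decisions = 0
    for node in ast.walk(func):
        if isinstance(node, (ast.If, ast.IfExp, ast.For, ast.AsyncFor,
                             ast.While, ast.ExceptHandler)):
            decisions += 1
        elif isinstance(node, ast.comprehension):
            # each `for` in a comprehension, plus each of its `if` filters
            decisions += 1 + len(node.ifs)
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds one path per extra operand
            decisions += len(node.values) - 1
    return 1 + decisions


def required_tests(source, has_business_logic, critical=False, regulatory=False):
    """Hypothetical encoding of the rule of thumb above."""
    complexity = cyclomatic_complexity(source)
    if complexity > 5:
        return ["redesign"]
    tests = []
    if has_business_logic:
        tests.append("integration")
    if critical or regulatory or 3 <= complexity <= 5:
        tests.append("unit")
    return tests


SHIPPING_FEE = '''
def shipping_fee(weight, express, country):
    if weight <= 0:
        raise ValueError("weight must be positive")
    fee = 5 if weight < 2 else 9
    if express and country != "US":
        fee += 15
    return fee
'''

GETTER = '''
def get_name(self):
    return self._name
'''

print(cyclomatic_complexity(SHIPPING_FEE))                    # 5
print(required_tests(SHIPPING_FEE, has_business_logic=True))  # ['integration', 'unit']
print(required_tests(GETTER, has_business_logic=False))       # []
```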

So, I start with that, assess how well defects are captured (how many escape), and then adjust the approach based on the nature of the defects.
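One way to measure “how many escape” is to record, for each defect, the stage where it was first found, then compute each stage’s share. This is a hypothetical Python sketch: the stage names, the `escape_report` helper, and the counts are invented for illustration. One minus the escape rate is the overall defect-removal efficiency of the testing process:

```python
def escape_report(defects_by_stage, escaped_stage="production"):
    """Share of all recorded defects first found at each stage.

    `defects_by_stage` maps a stage name (a test type, or the stage where
    escaped defects surfaced) to the number of defects first found there.
    Returns (shares, escape_rate).
    """
    total = sum(defects_by_stage.values())
    if total == 0:
        raise ValueError("no defects recorded")
    shares = {stage: count / total for stage, count in defects_by_stage.items()}
    escape_rate = shares.get(escaped_stage, 0.0)
    return shares, escape_rate


# Hypothetical counts for one release, for illustration only.
shares, escape_rate = escape_report({"unit": 12, "integration": 18, "ui": 4, "production": 6})
print(f"escape rate: {escape_rate:.0%}")  # escape rate: 15%
```

Tracking which kinds of defects end up in the escaped bucket, release after release, is what tells you whether to shift effort toward unit, integration, or UI tests.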

What are your thoughts on this? Do you also think the testing needs are more important than having a visually appealing strategy? Sound off in the comments below!

Cheers! :-)

If you like this post, please share it. It will help me a lot and keep me motivated to write more. Also, subscribe to get notified when new posts come out.